Overall architecture

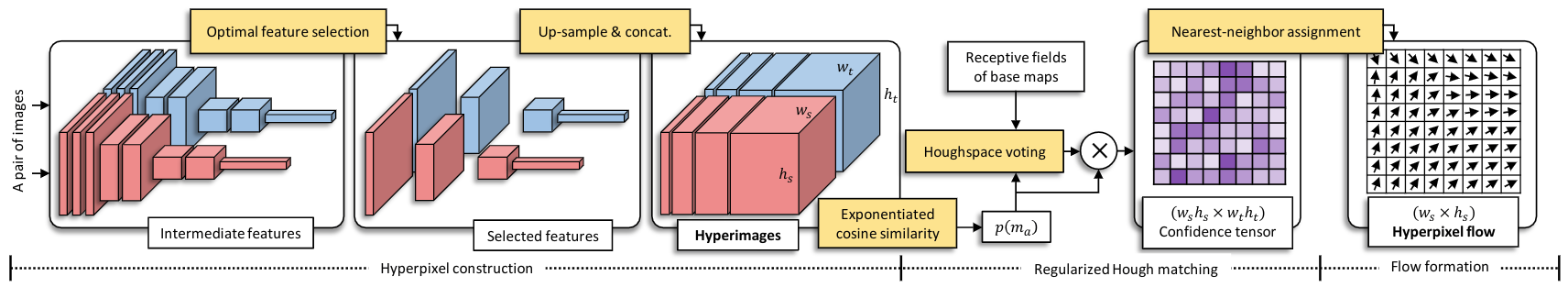

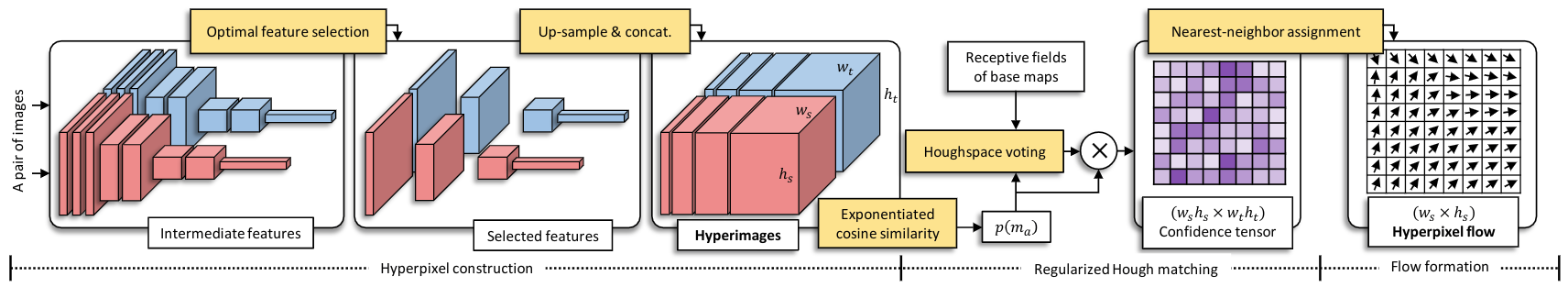

Figure 1. Overall architecture of the proposed method. Hyperpixel flow consists of three main components: hyperpixel construction, regularized Hough matching, and flow formation.

Juhong Min<sup>1,2</sup> · Jongmin Lee<sup>1,2</sup> · Jean Ponce<sup>3,4</sup> · Minsu Cho<sup>1,2</sup>

<sup>1</sup>POSTECH · <sup>2</sup>NPRC · <sup>3</sup>Inria · <sup>4</sup>DI ENS

Establishing visual correspondences under large intra-class variations requires analyzing images at different levels, from features linked to semantics and context to local patterns, while being invariant to instance-specific details. To tackle these challenges, we represent images by "hyperpixels" that leverage a small number of relevant features selected among early to late layers of a convolutional neural network. Taking advantage of the condensed features of hyperpixels, we develop an effective real-time matching algorithm based on Hough geometric voting. The proposed method, hyperpixel flow, sets a new state of the art on three standard benchmarks as well as a new dataset, SPair-71k, which contains a significantly larger number of image pairs than existing datasets, with more accurate and richer annotations for in-depth analysis.
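To make the idea of Hough geometric voting concrete, here is a rough, simplified sketch in NumPy. It scores every candidate match by its appearance similarity times the (smoothed) vote mass of its translation offset, then keeps the best target for each source point as a sparse flow. It is a stand-in under stated assumptions (translation-only Hough space, hard binning, a 3x3 box filter for regularization), not the authors' regularized Hough matching; `hough_matching`, `cosine_similarity`, `n_bins`, and the toy data are hypothetical.

```python
import numpy as np

def cosine_similarity(src_feats, trg_feats):
    """Appearance similarity between every source and target feature, shape (N, M)."""
    src = src_feats / np.linalg.norm(src_feats, axis=1, keepdims=True)
    trg = trg_feats / np.linalg.norm(trg_feats, axis=1, keepdims=True)
    # Clip negatives so that only positive evidence casts votes.
    return np.clip(src @ trg.T, 0.0, None)

def hough_matching(src_feats, trg_feats, src_pos, trg_pos, n_bins=21):
    """Re-weight appearance similarity by translation votes (hypothetical helper).

    src_pos: (N, 2) and trg_pos: (M, 2) positions normalized to [0, 1].
    Returns an (N, M) confidence matrix.
    """
    sim = cosine_similarity(src_feats, trg_feats)
    # Translation of every candidate match: target position minus source position.
    offsets = trg_pos[None, :, :] - src_pos[:, None, :]  # (N, M, 2)
    # Quantize offsets in [-1, 1] into n_bins per axis.
    idx = np.clip(np.floor((offsets + 1.0) / 2.0 * n_bins).astype(int), 0, n_bins - 1)
    # Each candidate match votes for its offset bin, weighted by its appearance score.
    votes = np.zeros((n_bins, n_bins))
    np.add.at(votes, (idx[..., 0], idx[..., 1]), sim)
    # Box-filter the votes over a 3x3 neighbourhood to tolerate quantization error.
    padded = np.pad(votes, 1)
    smoothed = sum(padded[i:i + n_bins, j:j + n_bins] for i in range(3) for j in range(3))
    # Confidence = appearance score x geometric consensus of the match's offset bin.
    return sim * smoothed[idx[..., 0], idx[..., 1]]

# Toy example: four one-hot features shifted by (0.3, 0.2), plus a distractor
# target (index 0) that looks like source 0 but sits at an inconsistent offset.
src_feats = np.eye(4)
src_pos = np.array([[0.1, 0.1], [0.3, 0.2], [0.2, 0.5], [0.4, 0.4]])
trg_feats = np.vstack([np.eye(4)[:1], np.eye(4)])
trg_pos = np.vstack([[[0.9, 0.9]], src_pos + [0.3, 0.2]])

confidence = hough_matching(src_feats, trg_feats, src_pos, trg_pos)
matches = confidence.argmax(axis=1).tolist()  # best target index for each source point
```

Appearance alone ties source 0 between the distractor and its true match; the translation shared by the other three matches gathers more votes and breaks the tie in favour of target 1.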


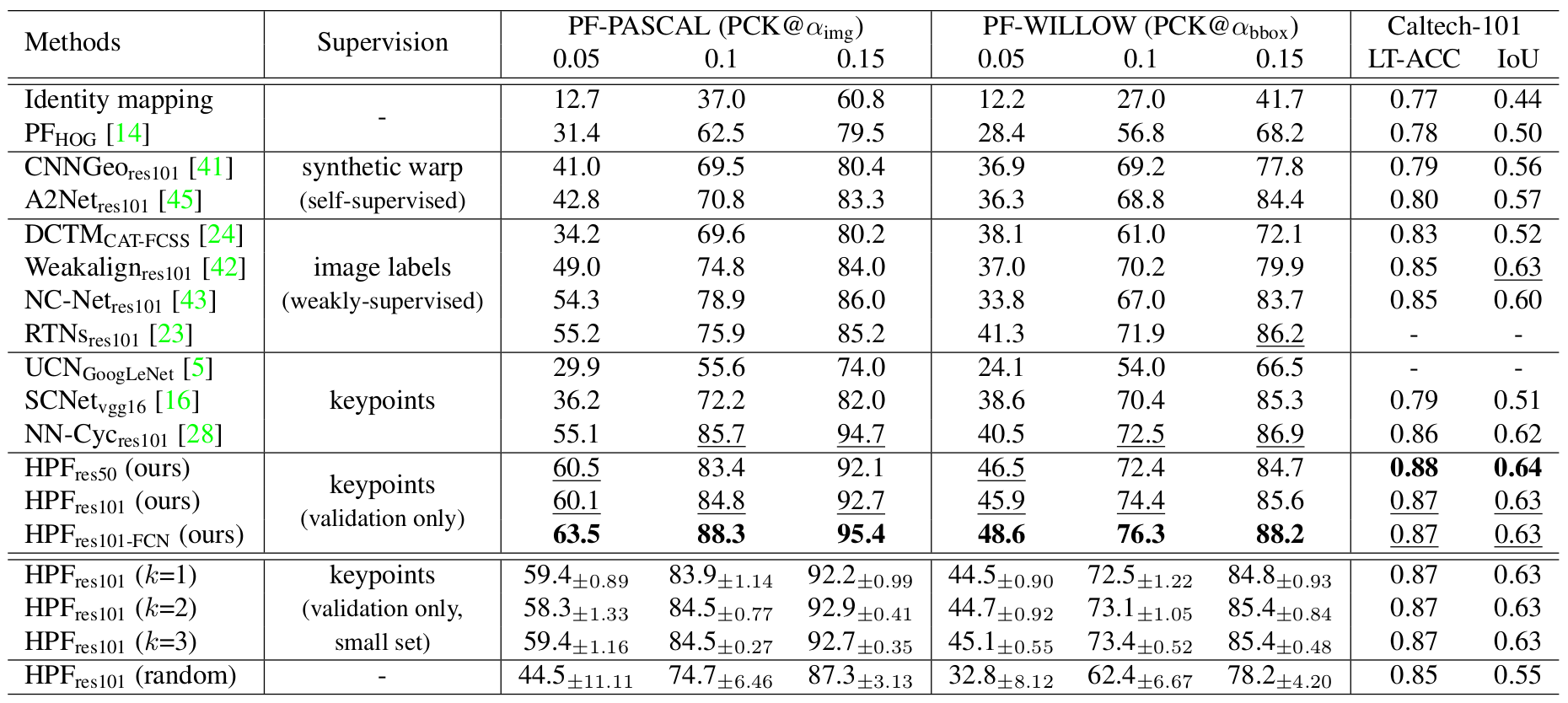

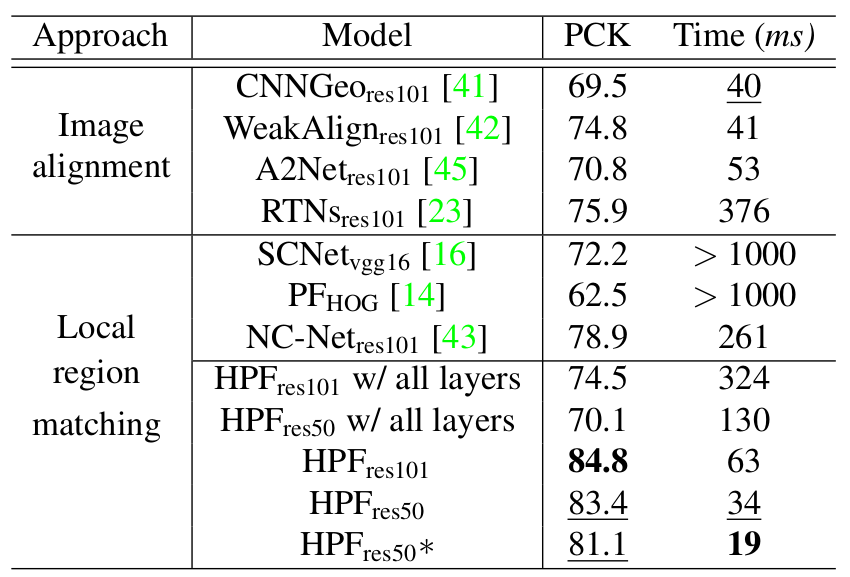

Table 1. Results on standard benchmarks of semantic correspondence. Subscripts of the method names indicate the backbone networks used. The second column denotes the supervisory information used for training or tuning. Numbers in bold indicate the best performance and underlined ones are the second and third best. Results of [14,16,24,41,42] are borrowed from [23].

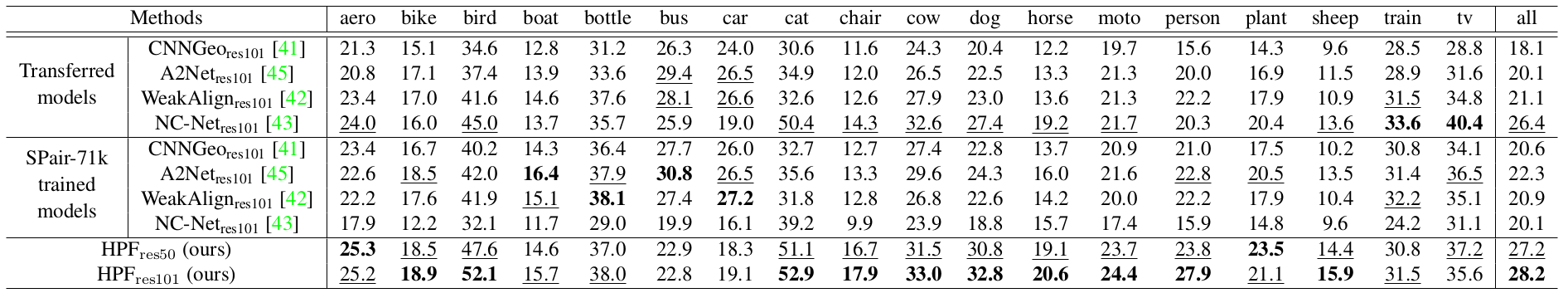

Table 2. Per-class PCK results on the SPair-71k dataset. For the transferred models, the original models trained on PASCAL-VOC and PF-PASCAL and provided by their authors are used for evaluation. For the SPair-71k-trained models, we further fine-tuned the transferred models on SPair-71k ourselves, to the best of our ability. Numbers in bold indicate the best performance and underlined ones are the second and third best.
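PCK (percentage of correct keypoints) counts a transferred keypoint as correct when it lands within a threshold distance of its ground-truth location. The sketch below assumes the threshold commonly used on SPair-71k, α · max(h, w) of the target object bounding box with α = 0.1, and averages per-pair PCK within each class; other averaging choices exist, so treat this as an illustration rather than the exact evaluation protocol. `pck`, `per_class_pck`, and the toy results are hypothetical.

```python
from collections import defaultdict

import numpy as np

def pck(pred_kps, gt_kps, bbox, alpha=0.1):
    """Fraction of keypoints within alpha * max(bbox height, width) of ground truth.

    pred_kps, gt_kps: (K, 2) sequences of (x, y); bbox: (x1, y1, x2, y2) of the target object.
    """
    x1, y1, x2, y2 = bbox
    threshold = alpha * max(x2 - x1, y2 - y1)
    dists = np.linalg.norm(np.asarray(pred_kps) - np.asarray(gt_kps), axis=1)
    return float(np.mean(dists <= threshold))

def per_class_pck(results, alpha=0.1):
    """results: iterable of (class_name, pred_kps, gt_kps, bbox), one entry per image pair."""
    scores = defaultdict(list)
    for cls, pred, gt, bbox in results:
        scores[cls].append(pck(pred, gt, bbox, alpha))
    # Average the per-pair PCK values within each class.
    return {cls: float(np.mean(vals)) for cls, vals in scores.items()}

# Toy predictions: one of two "cat" keypoints misses in the first pair.
results = [
    ("cat", [[10, 10], [50, 50]], [[12, 10], [80, 50]], (0, 0, 100, 100)),
    ("cat", [[5, 5]], [[5, 5]], (0, 0, 100, 100)),
    ("dog", [[0, 0], [3, 4]], [[0, 0], [0, 0]], (0, 0, 40, 20)),
]
class_scores = per_class_pck(results)
```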

* Benchmark datasets are available at [SPair-71k] [PF-PASCAL] [PF-WILLOW] [Caltech-101]

Table 3. Inference time comparison on the PF-PASCAL benchmark. Hyperpixel layers of HPF<sub>res50</sub>* are (4, 7, 11, 12, 13).
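A hyperpixel stacks features from a few selected layers at each image location, such as layers (4, 7, 11, 12, 13) for HPF<sub>res50</sub>*. A minimal NumPy sketch, assuming each selected feature map is resized to the spatial resolution of the first selected layer and the maps are concatenated along channels; nearest-neighbour resizing is swapped in for bilinear interpolation to keep the example dependency-light. `build_hyperpixels`, `resize_nearest`, and the toy channel and spatial sizes are hypothetical; real sizes depend on the backbone and input resolution.

```python
import numpy as np

def resize_nearest(feat, out_h, out_w):
    """Nearest-neighbour resize of a (C, H, W) feature map to (C, out_h, out_w)."""
    _, h, w = feat.shape
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return feat[:, rows[:, None], cols[None, :]]

def build_hyperpixels(layer_feats, hyperpixel_ids):
    """Concatenate selected layers' features into per-location hyperpixel vectors.

    layer_feats: dict mapping layer index -> (C_l, H_l, W_l) array.
    Returns an (H * W, sum of C_l) array at the resolution of the first selected layer.
    """
    _, out_h, out_w = layer_feats[hyperpixel_ids[0]].shape
    resized = [resize_nearest(layer_feats[i], out_h, out_w) for i in hyperpixel_ids]
    stacked = np.concatenate(resized, axis=0)  # (sum C_l, H, W)
    return stacked.reshape(stacked.shape[0], -1).T  # (H * W, sum C_l)

# Toy feature maps: two finer layers and three coarser ones (made-up sizes).
rng = np.random.default_rng(0)
shapes = {4: (8, 4, 4), 7: (8, 4, 4), 11: (16, 2, 2), 12: (16, 2, 2), 13: (16, 2, 2)}
layer_feats = {i: rng.random(s) for i, s in shapes.items()}
hyperpixels = build_hyperpixels(layer_feats, (4, 7, 11, 12, 13))
```

Each row of `hyperpixels` is one location's descriptor; these rows are what a matching step such as Hough voting would compare between source and target images.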

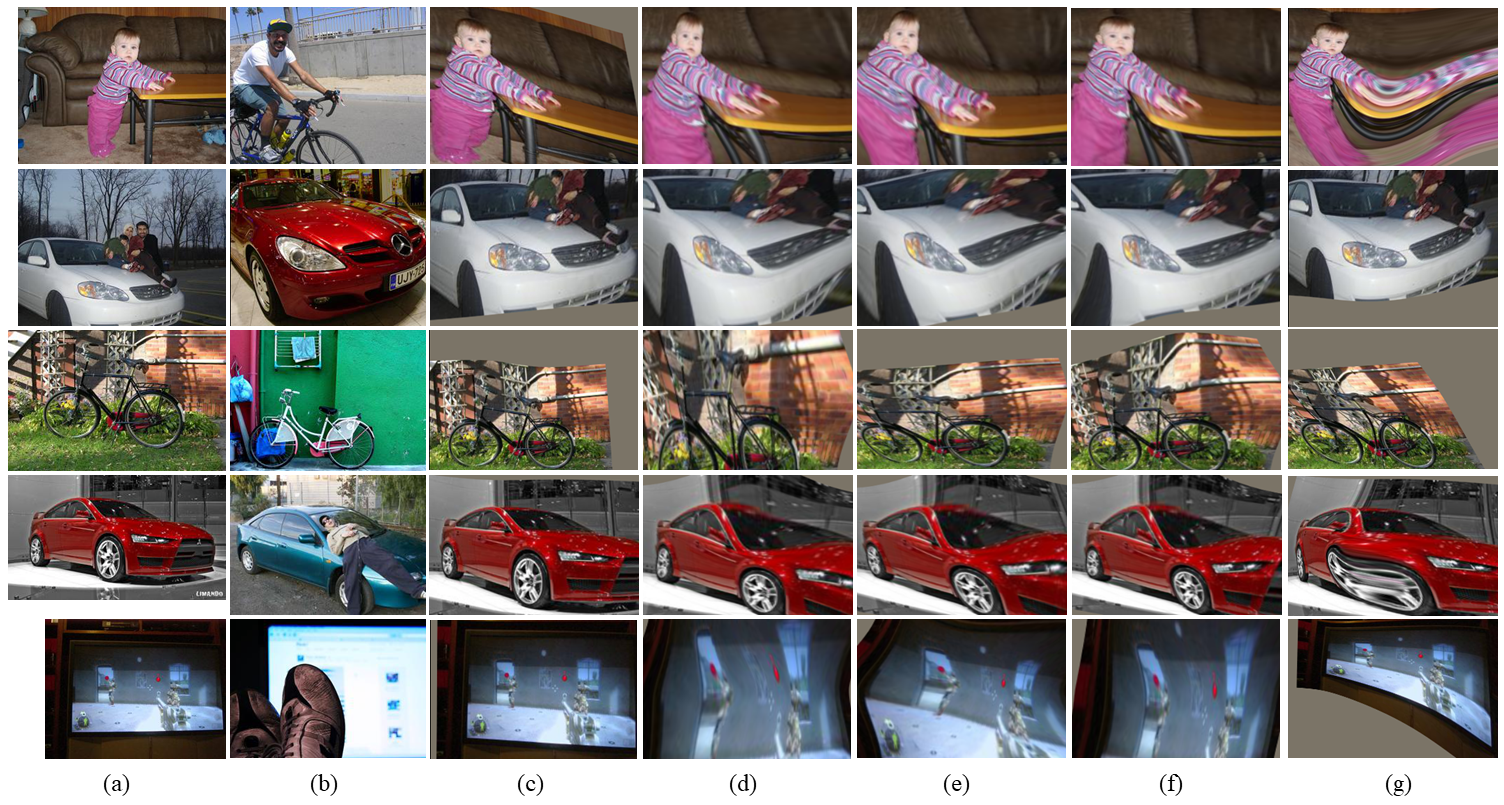

Figure 2. Qualitative results on the PF-PASCAL dataset: (a) source image, (b) target image, (c) Hyperpixel Flow (ours), (d) CNNGeo [5], (e) A2Net [8], (f) WeakAlign [6], and (g) NC-Net [7]. The source images are warped to the target images using the correspondences.

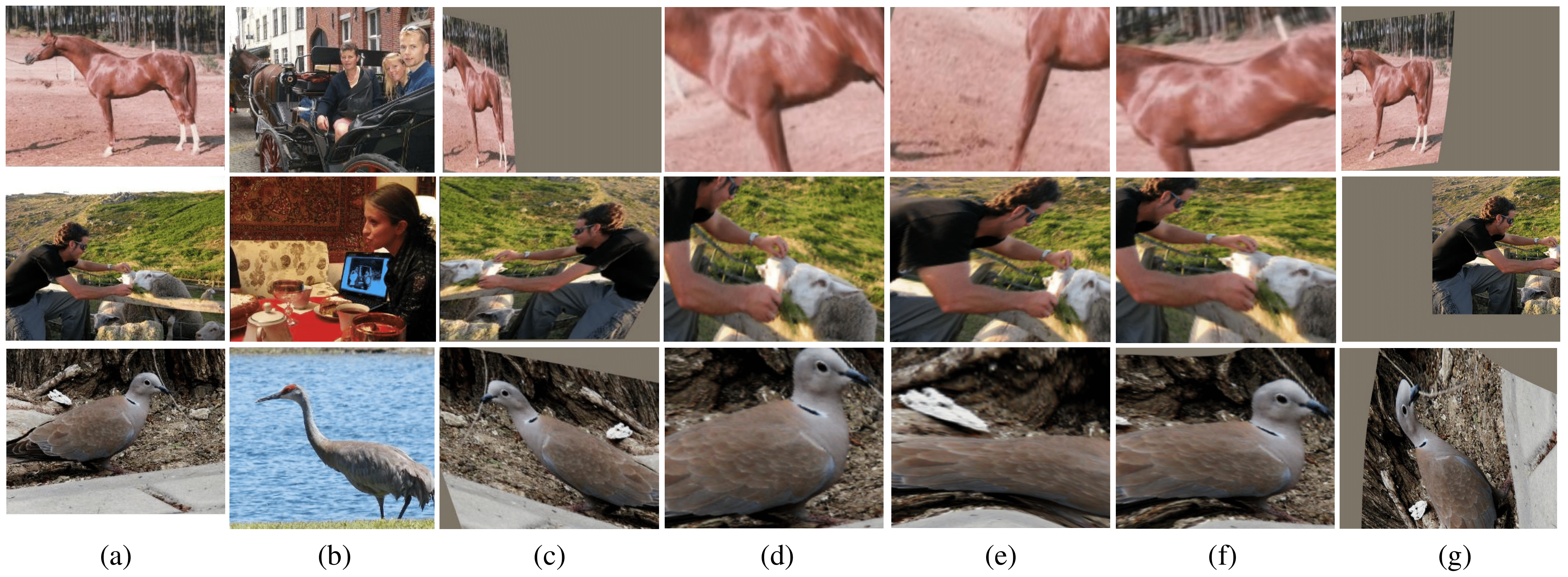

Figure 3. Qualitative results on the SPair-71k benchmark: (a) source image, (b) target image, (c) Hyperpixel Flow (ours), (d) CNNGeo [37], (e) A2Net [41], (f) WeakAlign [38], and (g) NC-Net [39]. Using the correspondences, the source images are warped to the target images by either an affine or a TPS transformation.

Check our GitHub repository: [github]