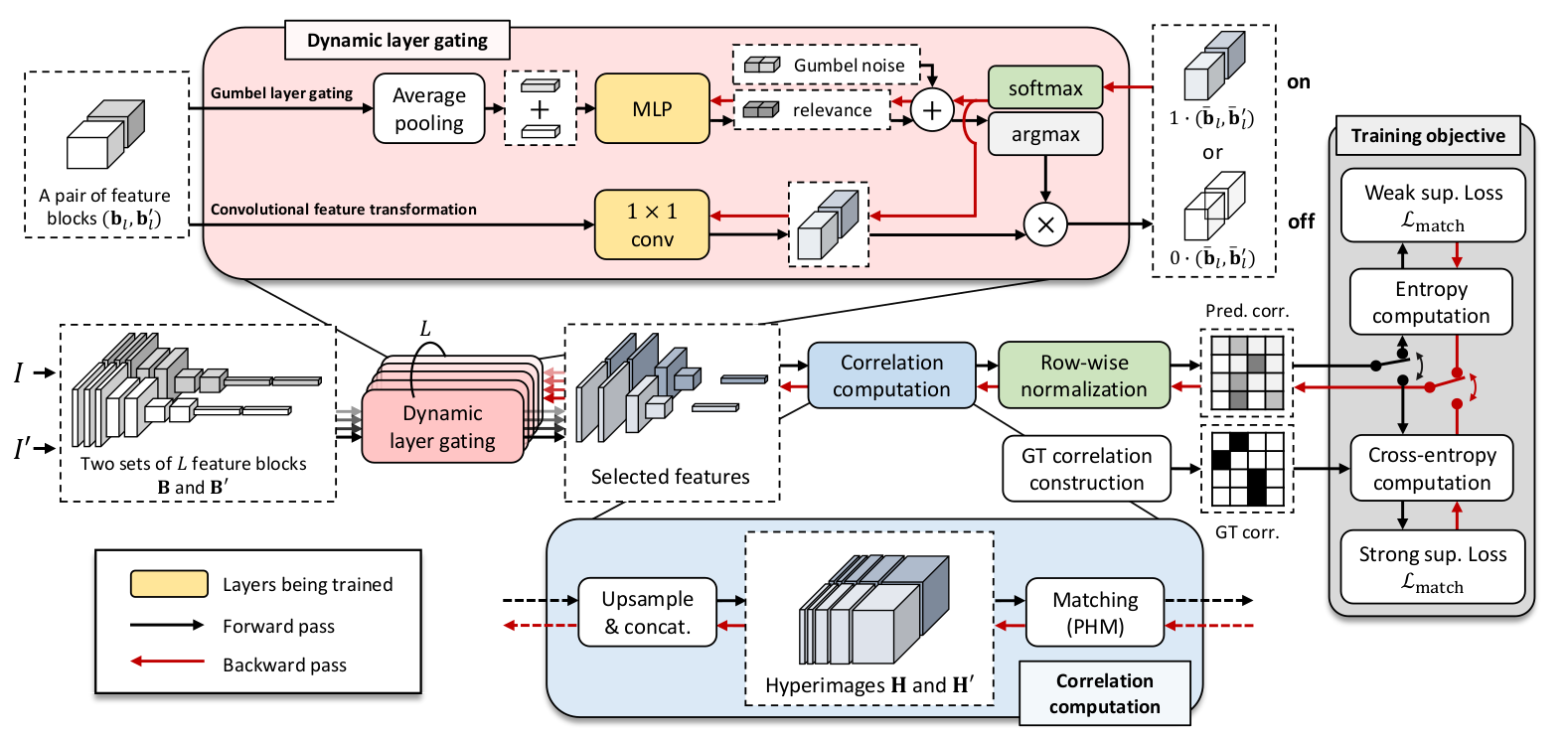

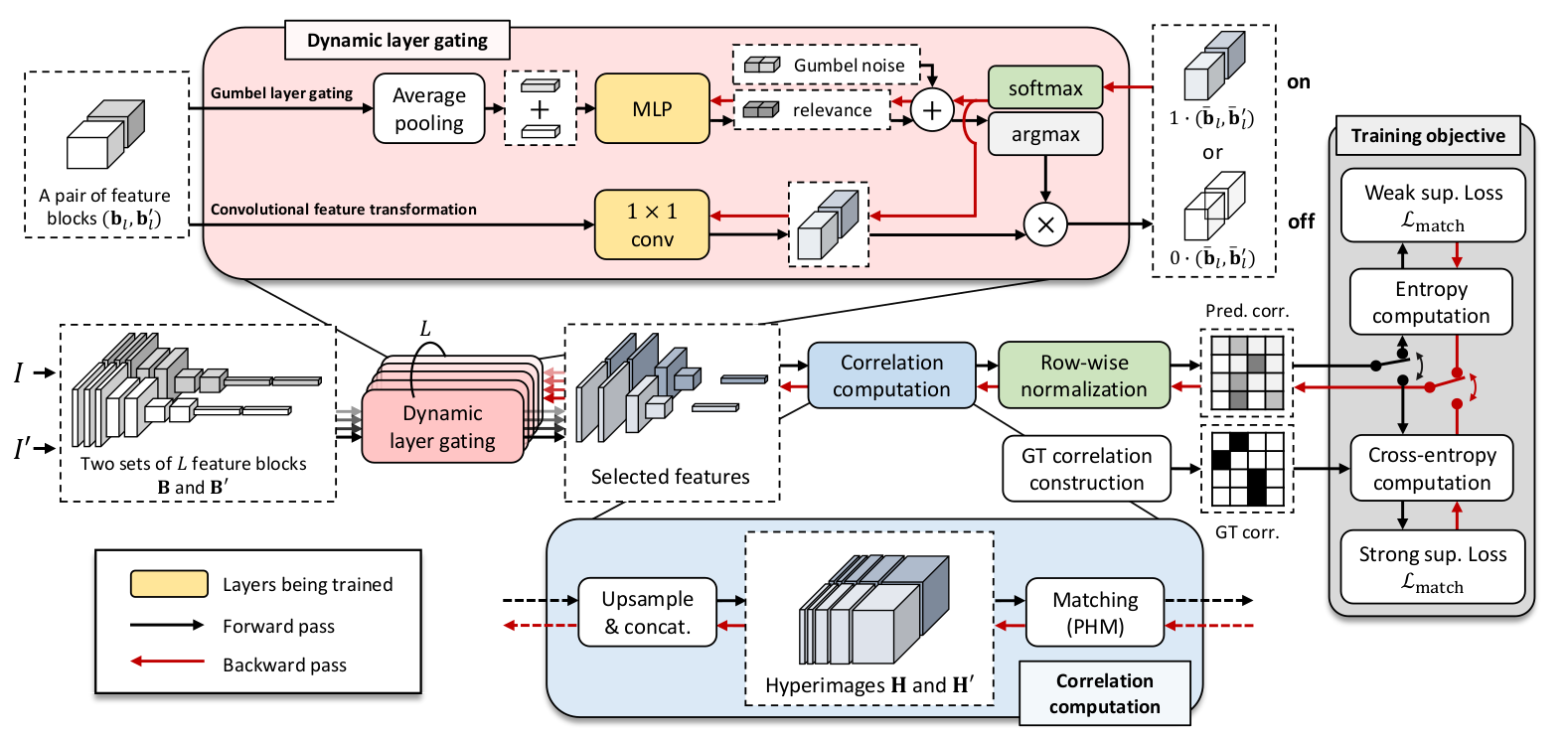


Figure 1. The overall architecture of Dynamic Hyperpixel Flow (DHPF).

| Juhong Min1,2 | Jongmin Lee1,2 | Jean Ponce3,4 | Minsu Cho1,2 |

| 1POSTECH | 2NPRC | 3Inria | 4ENS |

Feature representation plays a crucial role in visual correspondence, and recent methods for image matching resort to deeply stacked convolutional layers. These models, however, are both monolithic and static in the sense that they typically use a specific level of features, e.g., the output of the last layer, and adhere to it regardless of the images to match. In this work, we introduce a novel approach to visual correspondence that dynamically composes effective features by leveraging relevant layers conditioned on the images to match. Inspired by both multi-layer feature composition in object detection and adaptive inference architectures in classification, the proposed method, dubbed Dynamic Hyperpixel Flow, learns to compose hypercolumn features on the fly by selecting a small number of relevant layers from a deep convolutional neural network. We demonstrate its effectiveness on the task of semantic correspondence, i.e., establishing correspondences between images depicting different instances of the same object or scene category. Experiments on standard benchmarks show that the proposed method greatly improves matching performance over the state of the art in an adaptive and efficient manner.
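To make the idea of composing hypercolumn features from a few selected layers concrete, here is a minimal pure-Python sketch. It is not the authors' implementation: the names `upsample_nearest` and `compose_hypercolumn` are hypothetical, the gate values are hard-coded rather than learned from the image pair, and nearest-neighbour upsampling followed by channel concatenation is an assumed way of bringing the selected layers to a common resolution.

```python
from typing import List, Sequence

# A feature map is indexed as [channel][row][col].
FeatureMap = List[List[List[float]]]


def upsample_nearest(fmap: FeatureMap, out_h: int, out_w: int) -> FeatureMap:
    """Resize every channel to (out_h, out_w) by nearest-neighbour lookup."""
    in_h, in_w = len(fmap[0]), len(fmap[0][0])
    return [
        [[channel[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
         for r in range(out_h)]
        for channel in fmap
    ]


def compose_hypercolumn(layers: Sequence[FeatureMap],
                        gates: Sequence[int]) -> FeatureMap:
    """Stack the channels of the gated-on layers at a common resolution."""
    # Keep only the layers whose (hypothetical) gate is switched on.
    selected = [fmap for fmap, gate in zip(layers, gates) if gate == 1]
    if not selected:
        raise ValueError("at least one layer must be selected")
    # Use the largest spatial size among the selected layers as the target.
    out_h = max(len(fmap[0]) for fmap in selected)
    out_w = max(len(fmap[0][0]) for fmap in selected)
    hyper: FeatureMap = []
    for fmap in selected:
        hyper.extend(upsample_nearest(fmap, out_h, out_w))
    return hyper


# Toy backbone: three layers with shrinking resolution and growing width.
layers = [
    [[[1.0] * 4 for _ in range(4)] for _ in range(2)],  # 2 channels, 4x4
    [[[2.0] * 2 for _ in range(2)] for _ in range(3)],  # 3 channels, 2x2
    [[[3.0]] for _ in range(5)],                         # 5 channels, 1x1
]
gates = [1, 0, 1]  # assumed gate decisions for one image pair
hyper = compose_hypercolumn(layers, gates)
print(len(hyper), len(hyper[0]), len(hyper[0][0]))  # prints "7 4 4"
```

With a different image pair, a learned gating module would be expected to produce a different `gates` vector, so the composed feature changes per pair while the backbone stays fixed.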


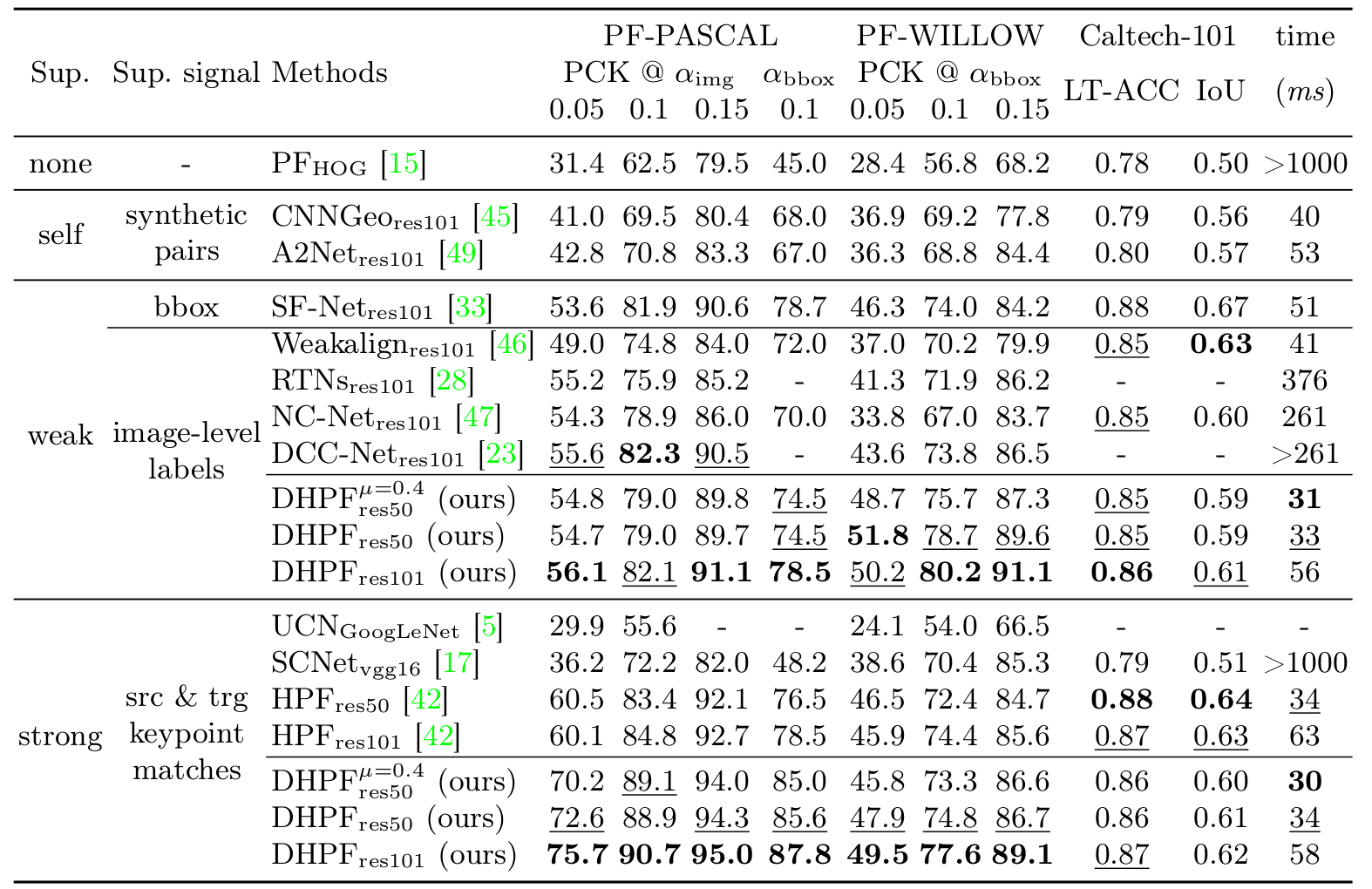

Table 1. Performance on standard benchmarks in accuracy and speed (avg. time per pair). The subscript of each method name denotes its feature extractor. Some results are from [25,28,33,42]. Numbers in bold indicate the best performance and underlined ones the second best. The average inference time (the last column) is measured on the test split of PF-PASCAL [16] and covers each model's full pipeline, from feature extraction to keypoint prediction.

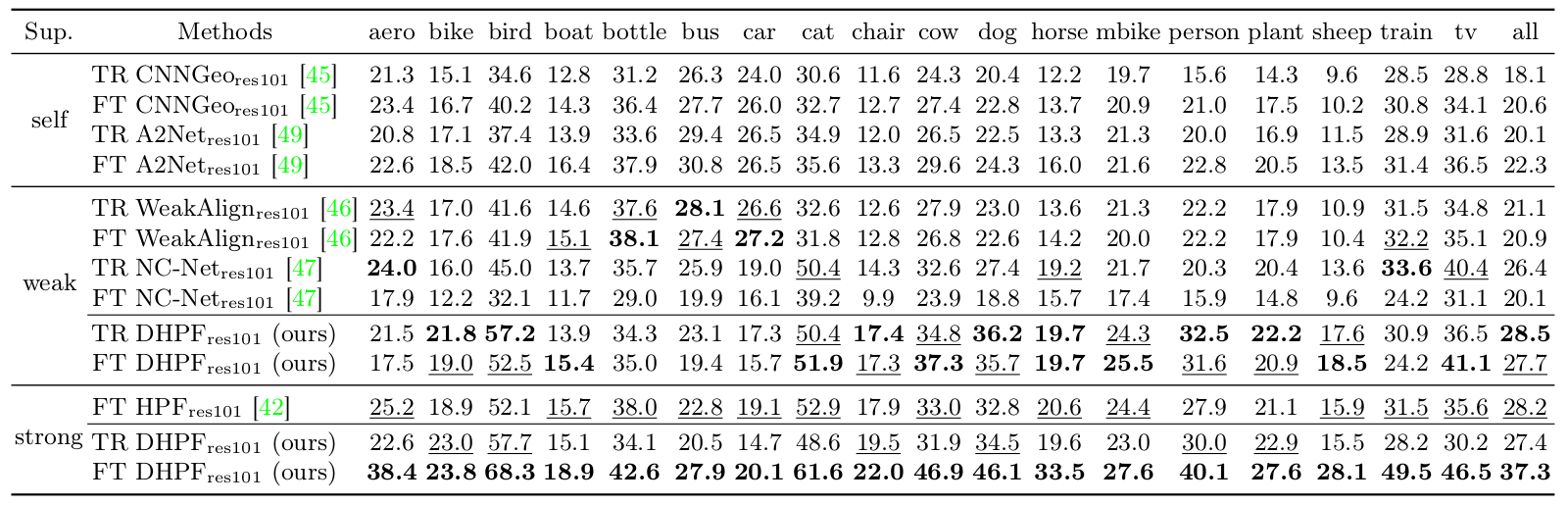

Table 2. Performance on the SPair-71k dataset in accuracy (per-class PCK with αbbox=0.1). TR denotes models trained on PF-PASCAL and transferred to SPair-71k, while FT denotes models fine-tuned (trained) on SPair-71k.
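For readers unfamiliar with the metric, PCK (percentage of correct keypoints) counts a transferred keypoint as correct when it lands within a distance threshold of its ground-truth position. The sketch below assumes the common bounding-box variant, where the threshold is α times the longer side of the object's bounding box. The function name `pck`, the `(x_min, y_min, x_max, y_max)` box format, and the toy coordinates are illustrative assumptions, and the per-class averaging used in the table is not shown.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # assumed (x_min, y_min, x_max, y_max)


def pck(pred: Sequence[Point], gt: Sequence[Point],
        bbox: Box, alpha: float = 0.1) -> float:
    """Fraction of predictions within alpha * max(box width, box height) of ground truth."""
    if len(pred) != len(gt) or not gt:
        raise ValueError("pred and gt must be non-empty and of equal length")
    x_min, y_min, x_max, y_max = bbox
    threshold = alpha * max(x_max - x_min, y_max - y_min)
    # A keypoint is correct when its Euclidean error does not exceed the threshold.
    correct = sum(1 for p, g in zip(pred, gt) if math.dist(p, g) <= threshold)
    return correct / len(gt)


# Toy example: a 100x50 box gives a threshold of 0.1 * 100 = 10 pixels.
pred = [(10.0, 10.0), (50.0, 50.0), (90.0, 20.0)]
gt = [(12.0, 14.0), (70.0, 50.0), (90.0, 29.0)]
score = pck(pred, gt, bbox=(0.0, 0.0, 100.0, 50.0), alpha=0.1)
print(round(score, 3))  # prints 0.667 (errors of about 4.5, 20 and 9 pixels)
```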

* Benchmark datasets are available at [SPair-71k] [PF-PASCAL] [PF-WILLOW] [Caltech-101]

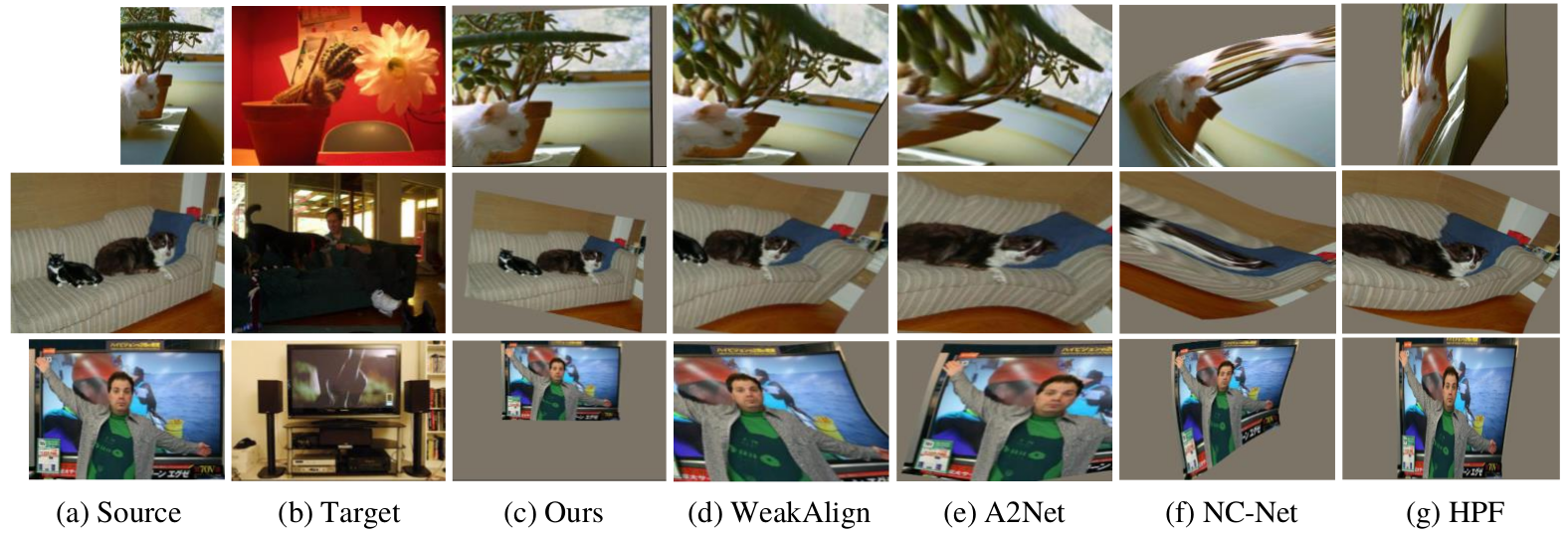

Figure 2. Example results on PF-PASCAL [16]: (a) source image, (b) target image, (c) ours, (d) WeakAlign [46], (e) A2Net [49], (f) NC-Net [47], and (g) HPF [42].

Check out our GitHub repository: [github]