Abstract

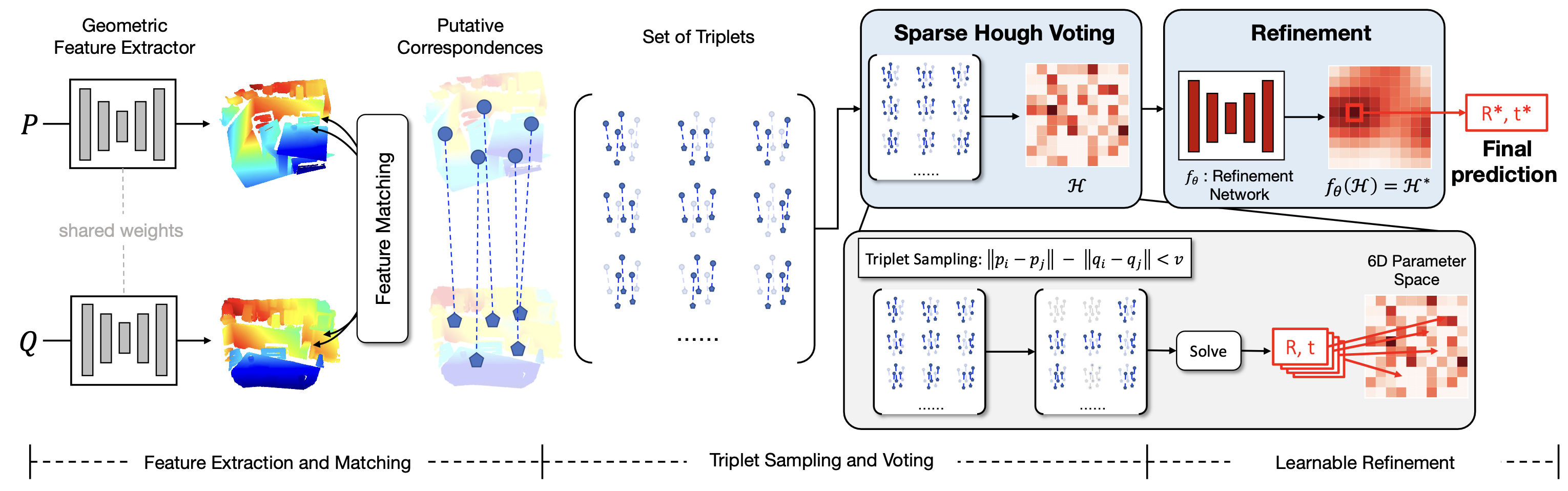

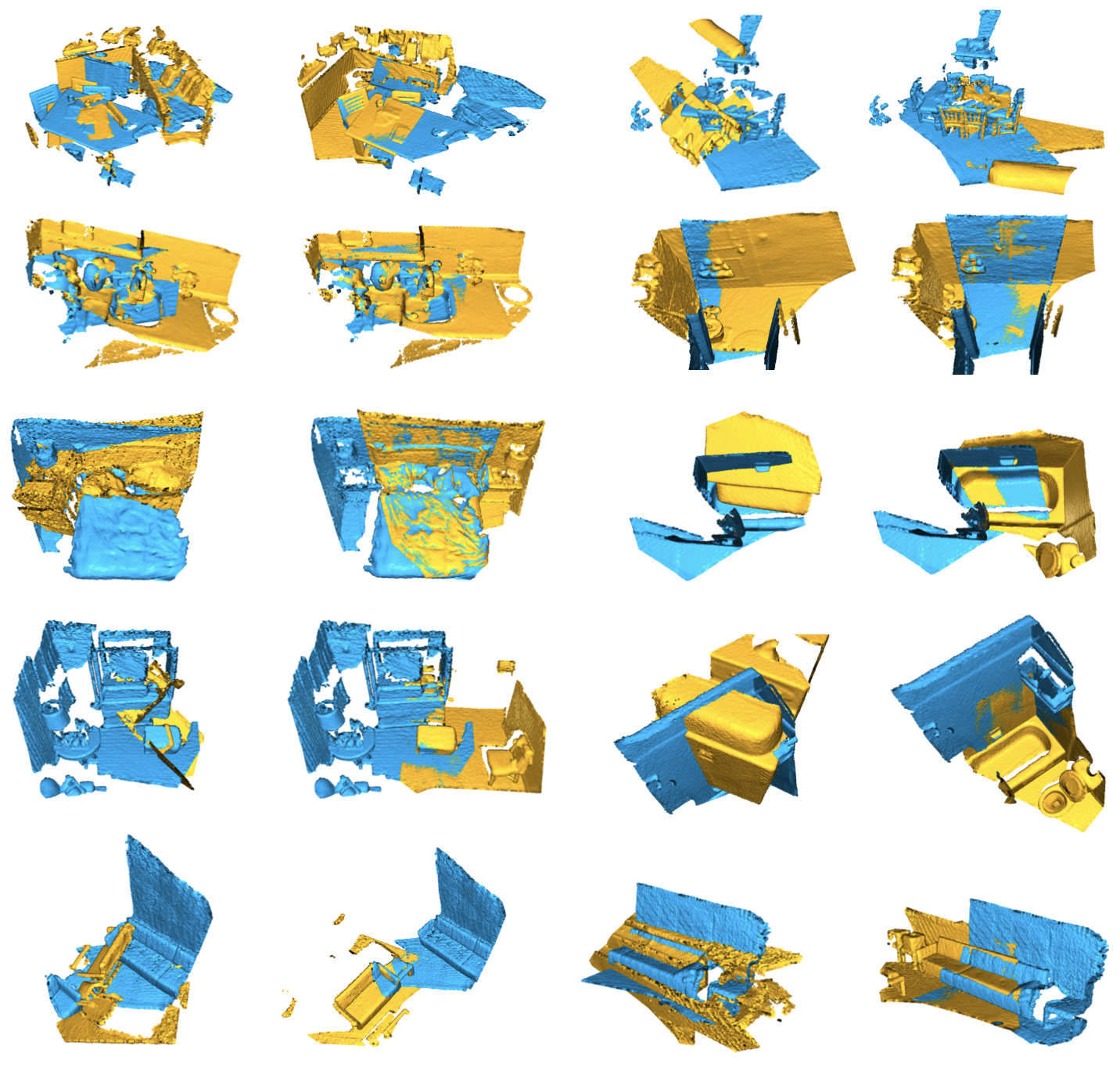

Point cloud registration is the task of estimating the rigid transformation that aligns a

pair of point cloud fragments. We present an efficient and robust framework for pairwise

registration of real-world 3D scans, leveraging Hough voting in the 6D transformation

parameter space. First, deep geometric features are extracted from a point cloud pair to

compute putative correspondences. We then construct a set of triplets of correspondences

to cast votes on the 6D Hough space, which represents the transformation parameters in the

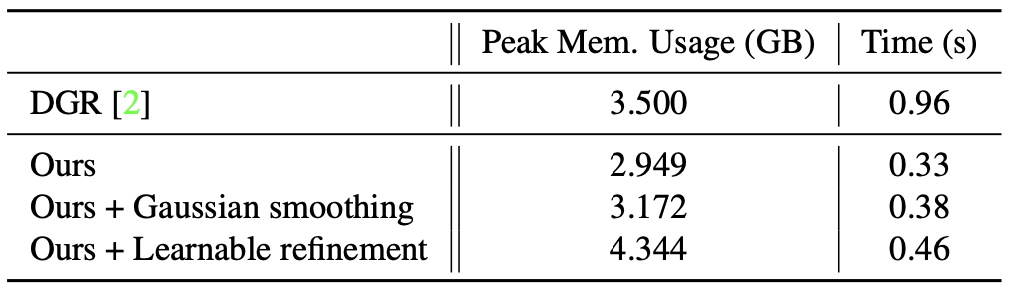

form of sparse tensors. Next, a fully convolutional refinement module is applied to refine

the noisy votes. Finally, we identify the consensus among the correspondences from the

Hough space, which we use to predict our final transformation parameters. Our method

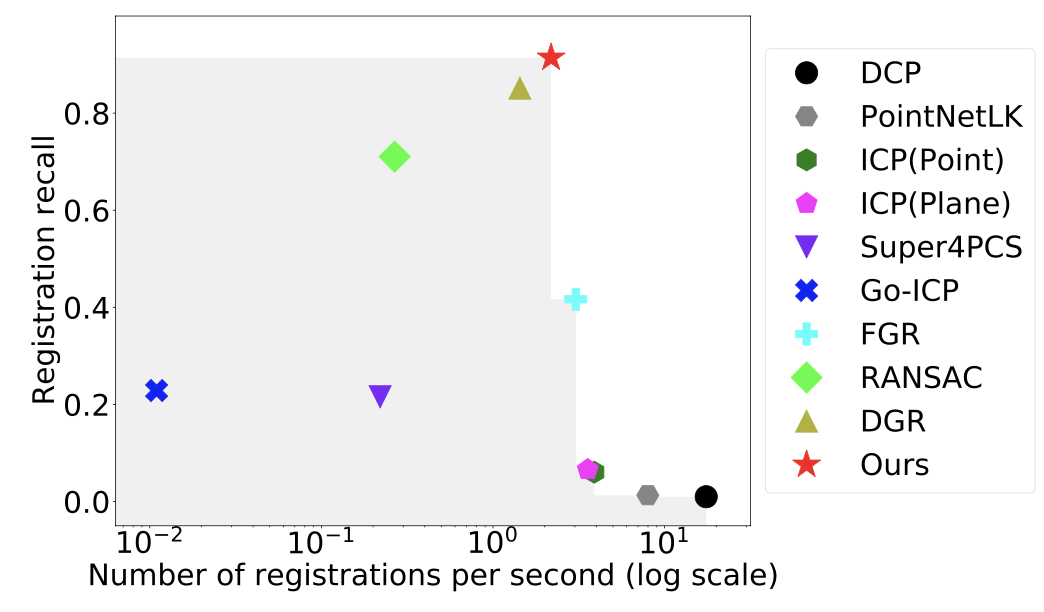

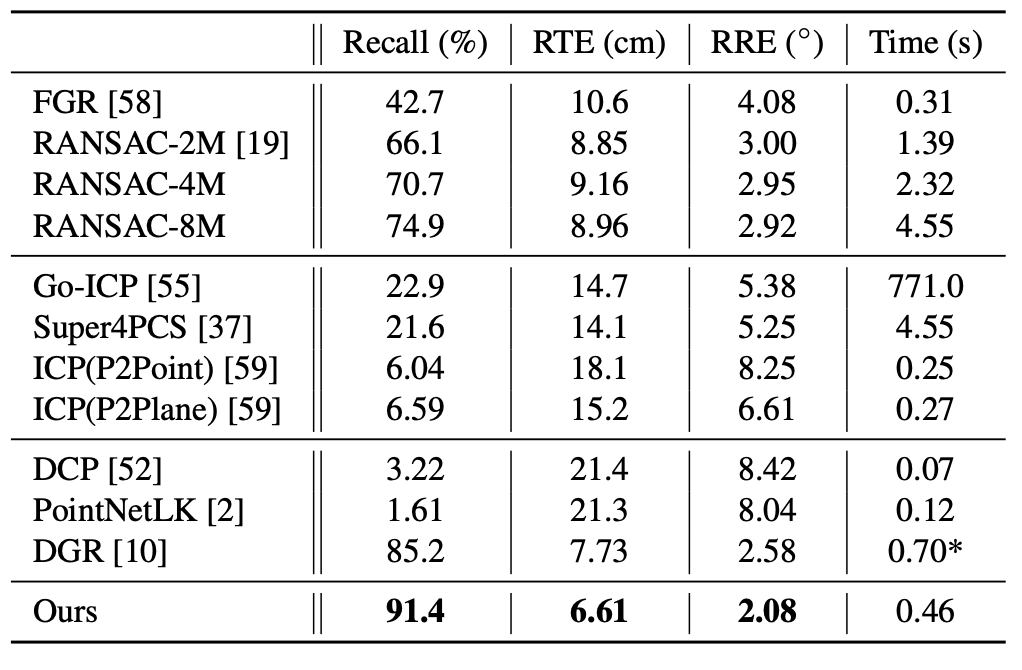

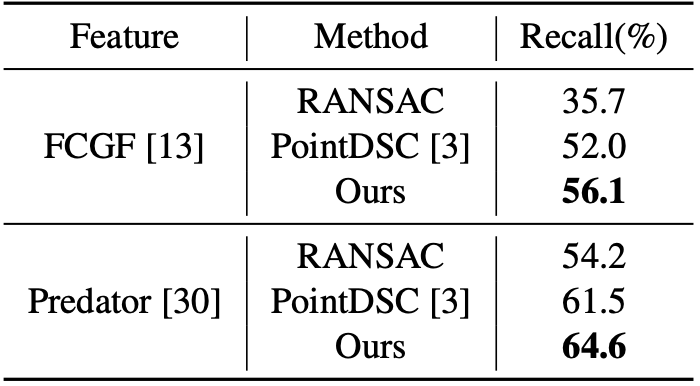

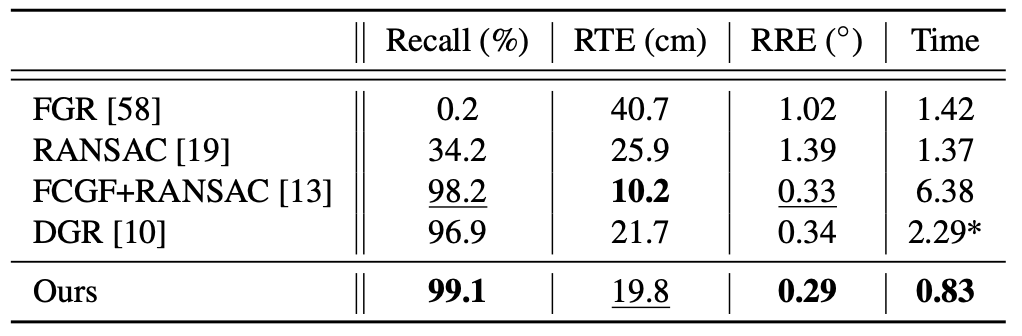

outperforms state-of-the-art methods on the 3DMatch and 3DLoMatch benchmarks, while

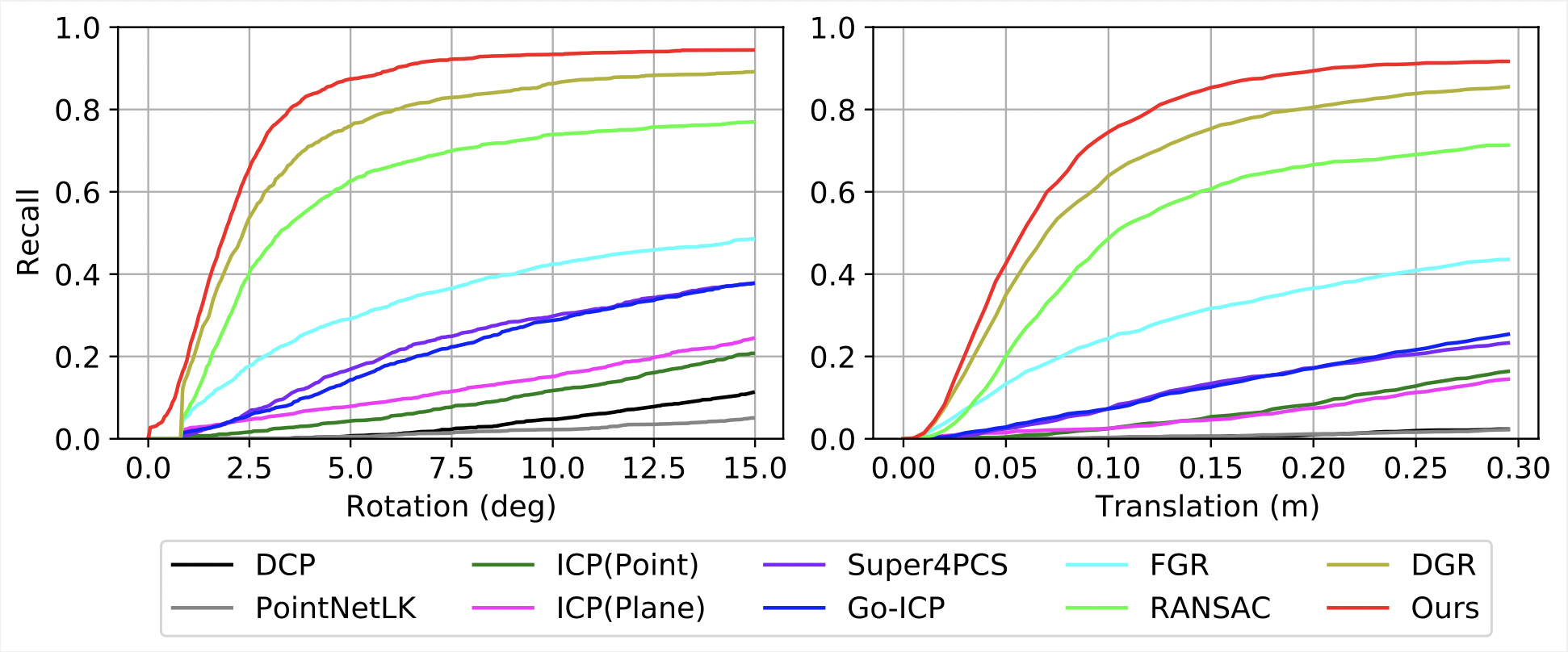

achieving comparable performance on the KITTI odometry dataset. We further demonstrate the

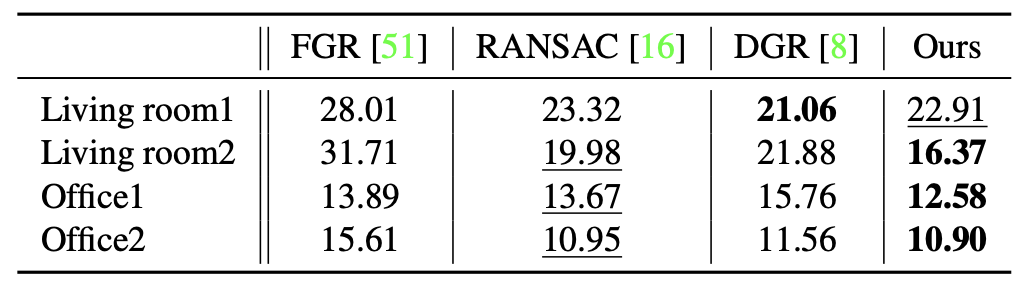

generalizability of our approach by setting a new state of the art on the ICL-NUIM

dataset, where we integrate our module into a multi-way registration pipeline.
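
To make the triplet-voting step concrete, the following is a minimal NumPy sketch and not the implementation described in this paper. It assumes an axis-angle plus translation parameterization of the 6D space, uniform bin sizes (`rot_bin`, `trans_bin`), random triplet sampling, and a Kabsch least-squares solve for each triplet; all function and parameter names are hypothetical. A plain `Counter` stands in for the sparse tensor, and the learned refinement module is omitted entirely.

```python
from collections import Counter

import numpy as np


def kabsch(src, dst):
    """Least-squares rigid transform (R, t) such that dst ~= src @ R.T + t."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)        # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))     # guard against reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


def rotation_to_axis_angle(R):
    """Rotation matrix -> 3-vector (unit axis scaled by angle in radians).

    Numerically unstable for angles close to pi; acceptable for a sketch.
    """
    angle = np.arccos(np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0))
    if angle < 1e-8:
        return np.zeros(3)
    axis = np.array([R[2, 1] - R[1, 2],
                     R[0, 2] - R[2, 0],
                     R[1, 0] - R[0, 1]]) / (2.0 * np.sin(angle))
    return angle * axis


def hough_vote(src, dst, rot_bin=0.1, trans_bin=0.1, max_triplets=2000, seed=0):
    """Cast one vote per sampled correspondence triplet into a sparse 6D grid.

    src[i] and dst[i] are assumed to be the two endpoints of the i-th
    putative correspondence. Returns a Counter mapping 6D bin index -> votes.
    """
    rng = np.random.default_rng(seed)
    votes = Counter()
    for _ in range(max_triplets):
        idx = rng.choice(len(src), size=3, replace=False)
        R, t = kabsch(src[idx], dst[idx])
        params = np.concatenate([rotation_to_axis_angle(R) / rot_bin,
                                 t / trans_bin])
        votes[tuple(int(v) for v in np.floor(params))] += 1
    return votes
```

In this sketch, triplets made up only of correct correspondences agree on the same bin, while triplets containing an outlier scatter across the 6D space, so `votes.most_common(1)` gives a coarse estimate of the transformation that can then be refined on the correspondences consistent with it.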