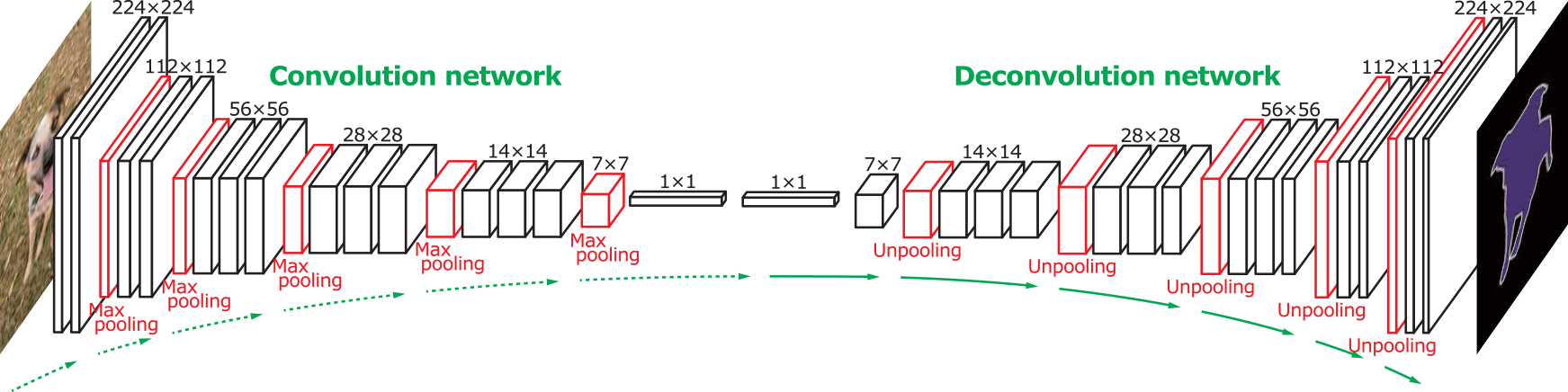

Figure 1. Overall architecture of the proposed network. On top of the convolution network based on the VGG 16-layer net, we put a multilayer deconvolution network to generate an accurate segmentation map of an input proposal. Given a feature representation obtained from the convolution network, a dense pixel-wise class prediction map is constructed through a series of unpooling, deconvolution, and rectification operations.
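
To make the three operations named in the caption concrete, the following is a minimal single-channel sketch in plain Python, not the network's actual implementation. It assumes that unpooling places each pooled activation back at the location recorded during max pooling (the `switches` list here is an illustrative name), and that deconvolution is a stride-1 transposed convolution with no cropping, so the output grows by the kernel size minus one. The helper names, the toy input, and the kernel values are all hypothetical.

```python
def max_pool_2x2(x):
    """2x2 max pooling with stride 2; also returns the argmax locations."""
    pooled, switches = [], []
    for i in range(0, len(x), 2):
        prow, srow = [], []
        for j in range(0, len(x[0]), 2):
            window = [(x[i + di][j + dj], (i + di, j + dj))
                      for di in (0, 1) for dj in (0, 1)]
            value, loc = max(window, key=lambda t: t[0])
            prow.append(value)
            srow.append(loc)
        pooled.append(prow)
        switches.append(srow)
    return pooled, switches


def unpool_2x2(pooled, switches, h, w):
    """Place each pooled value back at its recorded location; zeros elsewhere."""
    out = [[0.0] * w for _ in range(h)]
    for prow, srow in zip(pooled, switches):
        for value, (i, j) in zip(prow, srow):
            out[i][j] = value
    return out


def deconv2d(x, kernel):
    """Stride-1 transposed convolution: every input value scatters a scaled
    copy of the kernel into the output, of size (h + kh - 1, w + kw - 1)."""
    h, w = len(x), len(x[0])
    kh, kw = len(kernel), len(kernel[0])
    out = [[0.0] * (w + kw - 1) for _ in range(h + kh - 1)]
    for i in range(h):
        for j in range(w):
            for a in range(kh):
                for b in range(kw):
                    out[i + a][j + b] += x[i][j] * kernel[a][b]
    return out


def relu(x):
    """Element-wise rectification."""
    return [[max(0.0, v) for v in row] for row in x]


# Toy 4x4 activation map (hypothetical values, chosen to avoid ties).
feature = [[1.0, 2.0, 0.0, 6.0],
           [3.0, 4.0, 1.0, 0.0],
           [0.0, 1.0, 5.0, 2.0],
           [2.0, 0.0, 1.0, 3.0]]
kernel = [[1.0, -1.0],
          [0.5, 0.5]]  # hypothetical filter; a real one would be learned

pooled, switches = max_pool_2x2(feature)   # convolution-side pooling
unpooled = unpool_2x2(pooled, switches, 4, 4)  # sparse, enlarged map
dense = relu(deconv2d(unpooled, kernel))   # densified, rectified map
```

In this sketch, unpooling restores the spatial size but leaves most entries zero, and the transposed convolution followed by rectification fills in the neighborhood of each restored activation. A multilayer deconvolution network would repeat this pattern with many channels and learned filters.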